So, this is one of the craziest things I’ve seen yet during a RAID data recovery case, and I just have to share it with the world.

I’ve handled many, many RAID cases, including some very large and complex ones, but this one was strange in a way I didn’t even think possible. I had to rack my brain to figure out how what I found could even happen, and I still don’t fully understand how it did.

I’m sure you guys who work in data recovery will enjoy reading this crazy case. It goes to show that there will never be an end to the surprises in the data recovery field.

The Initial RAID Recovery Findings

So this case was a seemingly simple RAID 5 consisting of 3 SCSI drives. The drives were a little twitchy, but with a day of fussing around, we managed to image all but a couple of sectors of all three disks. So far so good.

Within just a few minutes I had determined the RAID settings. Drive order was 3, 2, 1, with left-asymmetric parity rotation and a 32 KB block size. The partition table was found in sector 128, so we know we’ve got a small controller metadata area at the beginning of the disks. Nothing out of the ordinary so far.
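For anyone who hasn’t worked with these settings, here is a rough sketch of how a left-asymmetric RAID 5 maps a logical block to a physical disk. It uses the drive order and block size from this case. The function name `locate_block` and the `DATA_START` value are my own assumptions for illustration: I’m assuming 512-byte sectors and that array data begins at sector 128 on each member, which may not match how a given controller lays out its metadata.

```python
BLOCK_SIZE = 32 * 1024      # 32 KB block size found in this case
DRIVE_ORDER = [3, 2, 1]     # array position 0, 1, 2 -> disk label
DATA_START = 128 * 512      # ASSUMPTION: data begins at sector 128, 512-byte sectors


def locate_block(logical_block, n_disks=3):
    """Map a logical data block to its disk under left-asymmetric RAID 5.

    Parity starts on the last position in row 0 and moves one position
    to the left on each following row. Data blocks fill the remaining
    positions from left to right, skipping the parity position.
    """
    data_per_row = n_disks - 1
    row, index_in_row = divmod(logical_block, data_per_row)
    parity_pos = (n_disks - 1) - (row % n_disks)
    # Skip over the parity position when placing data blocks.
    data_pos = index_in_row if index_in_row < parity_pos else index_in_row + 1
    return {
        "disk": DRIVE_ORDER[data_pos],
        "parity_disk": DRIVE_ORDER[parity_pos],
        "byte_offset": DATA_START + row * BLOCK_SIZE,
    }


if __name__ == "__main__":
    for block in range(6):
        print(block, locate_block(block))
```

With three disks, each row holds two data blocks and one parity block, so the parity position cycles through all three drives every three rows.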

I found two partitions, which together take up only about 30% of the array size. I assume there may be another virtual disk to look for after I figure everything else out.

After opening up the file systems of the two partitions, it’s clear that we have a badly out-of-sync drive. I quickly discover that it’s Disk 2, which is about 7 years out of sync with the other two disks. So I kick it out and recover our good data from the first two partitions. All good so far!

Now Things Get Crazy with This RAID Recovery

Assuming there might be another virtual disk, I scan the whole array using the found settings and an empty placeholder parity drive for Disk 2. The latter 70% of the array clearly shows data, but no real file system. I find no extra partition tables, or any real indication that there is another virtual disk.
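In case the placeholder idea is unfamiliar: in RAID 5, the blocks at the same offset on all members XOR to zero, so a missing member can be rebuilt from the surviving ones. Below is a minimal sketch of that idea in Python. The function name `xor_blocks` is hypothetical, and this is not how any particular recovery tool implements it.

```python
def xor_blocks(blocks):
    """XOR equal-length byte blocks together.

    Given the surviving members of one RAID 5 stripe row (data and
    parity alike), the result is the content of the missing member.
    """
    length = len(blocks[0])
    acc = 0
    for block in blocks:
        if len(block) != length:
            raise ValueError("all blocks must have the same length")
        acc ^= int.from_bytes(block, "big")
    return acc.to_bytes(length, "big")


if __name__ == "__main__":
    d0 = b"\x01\x02\x03\x04"
    d1 = b"\x10\x20\x30\x40"
    parity = xor_blocks([d0, d1])          # what the controller would write
    rebuilt = xor_blocks([d0, parity])     # pretend the disk holding d1 is gone
    print(rebuilt == d1)
```

This only gives good data when the surviving members are in sync with each other, which is exactly why a stale disk has to be excluded before rebuilding.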

So, I assume that it’s just old data from a prior array using these same disks. It is a very old system, so any number of things could have happened over the years.

Data is returned to the client, the case is paid for and closed out… or so I thought.

The Search For The Lost Virtual Disk

A few days later the client calls up saying that they are missing some critical data. He even analyzed some desktop shortcuts we’d recovered and discovered there was definitely a third logical volume. We had recovered his C: and D: but the missing data was on an E: and nowhere to be found in the recovered data.

So now I’m back on this case trying to find this lost virtual disk on the array. I want a happy customer after all and I certainly don’t plan on giving back the money paid.

Initially, I’m thinking that perhaps the stripe size is different or a different RAID type was used on the second VD (it’s rare to see, but some controllers can do this). All the while, I’m just using Disks 1 and 3, since I knew Disk 2 had been offline for the better part of a decade. But no matter how I built it, all I found was fragmented files and a handful of lost folders.

The Mystery RAID Case is Finally Solved

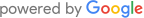

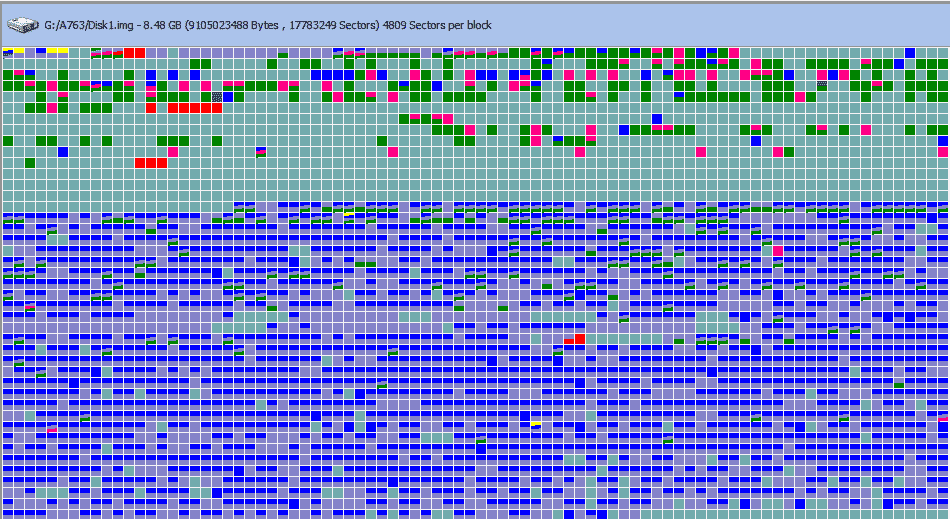

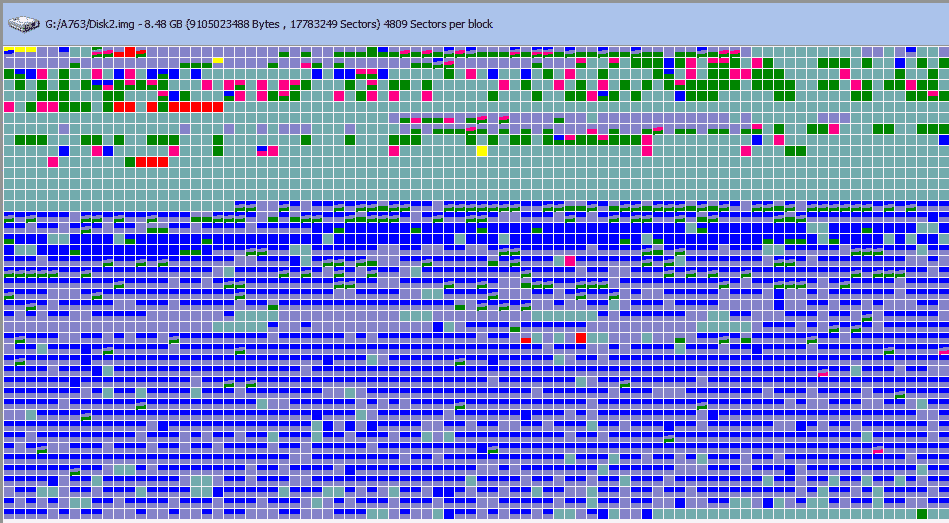

Finally, I decided to just scan the drives individually to see if I can find any NTFS starts, partition tables, etc. that might hold some clues for me. I happened to use R-Studio and notice what I found when I saw the visualization:

Sure enough, I built the array excluding Disk 3, scanned it again, and found another healthy file system in that area.
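If you want to try the same kind of per-disk search for NTFS starts without a GUI tool, a rough sketch might look like the one below. It walks a disk image sector by sector and reports every sector that looks like an NTFS boot sector (the `NTFS    ` OEM ID at byte offset 3 and the `55 AA` signature at the end). The function name `find_ntfs_boot_sectors` and the 512-byte sector size are my assumptions, and this is certainly not what R-Studio does internally.

```python
import io

SECTOR_SIZE = 512  # ASSUMPTION: 512-byte sectors


def find_ntfs_boot_sectors(stream, sector_size=SECTOR_SIZE):
    """Return the sector numbers in a disk image that look like NTFS boot sectors."""
    hits = []
    lba = 0
    while True:
        sector = stream.read(sector_size)
        if len(sector) < sector_size:
            break  # end of image (or a trailing partial sector)
        # NTFS OEM ID sits at bytes 3..10, boot signature at bytes 510..511.
        if sector[3:11] == b"NTFS    " and sector[510:512] == b"\x55\xaa":
            hits.append(lba)
        lba += 1
    return hits


if __name__ == "__main__":
    # Tiny synthetic image: 4 sectors, with a fake NTFS boot sector at sector 2.
    boot = bytearray(SECTOR_SIZE)
    boot[3:11] = b"NTFS    "
    boot[510:512] = b"\x55\xaa"
    image = bytes(SECTOR_SIZE) * 2 + bytes(boot) + bytes(SECTOR_SIZE)
    print(find_ntfs_boot_sectors(io.BytesIO(image)))
```

For a real image you would pass a file opened in binary mode instead of the `io.BytesIO` object. Since a boot sector is far smaller than the 32 KB block size, it sits intact on a single member, which is why scanning disks one at a time can turn up volumes that a wrongly assembled array hides.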

There are still a lot of mysteries here. Why was there no partition table anywhere on any disk pointing to this third volume/partition/whatever it is? That part is still a complete mystery.

It’s also a mystery how this RAID ended up limping along with one virtual disk running on two drives while another virtual disk from the same RAID set was running on a different two. So each VD had a different drive out of sync, even though both lived on the same set of three physical drives.

My only guess as to how this happened is that at some point the array became degraded. Later, a virtual disk was deleted from the degraded array and a new virtual disk was created, all without anyone addressing the degraded state. By that time the controller must have seen the failed disk as back online and then used it when creating that virtual disk.

I still have no idea how that VD could then have become degraded and continued to operate without the other VD dropping offline entirely. Maybe at some point they forced a drive back online to get the array running again?

Somehow that’s what happened. Two degraded virtual disks continued to operate for years, each on a different pair of online drives. It’s a total mystery to me, but fortunately, all data is now recovered.

Mystery solved!!! Well…sort of.